Comprehensive Standard 3.3.1

Institutional Effectiveness

The institution identifies expected outcomes, assesses the extent to which it achieves these outcomes, and provides evidence of improvement based on analysis of the results in the following area:

3.3.1.1. educational programs, to include student learning outcomes

Judgment of Compliance: Compliance

Narrative/Justification for Judgment of Compliance:

Executive Summary

At St. Petersburg College (SPC), Institutional Effectiveness is the integrated, systematic, explicit, and documented process of measuring performance against SPC's mission for the purpose of continuously improving the College. Because student success is the core mission of SPC, developing and measuring effective student learning outcomes is the cornerstone of the Institutional Effectiveness processes. Although there is no single right or best way to measure academic success, improvement, or quality, objectives still must be established, data must be collected and analyzed, and the results of those findings must be used to improve the institution. Operationally, this process ensures that the stated purposes of the College are accomplished.

The Institutional Research and Effectiveness division at SPC comprises several departments that work together on academic program assessment: Institutional Research, Curriculum Services, and Academic Effectiveness and Assessment (AEA). Although AEA oversees program assessment at SPC, the contributions and support that each of the other departments provides to academic programs help define specific elements of the integrated process.

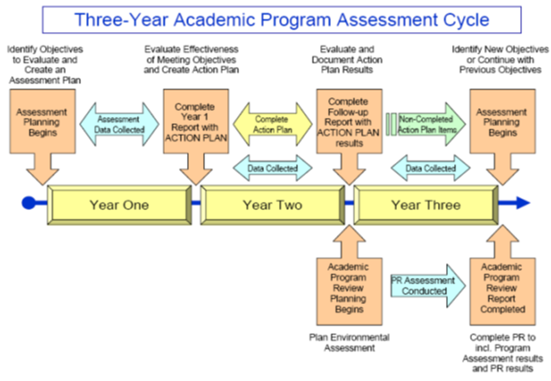

SPC is a Florida College System institution and, as such, offers programs in a 2+2 model. All active academic programs (currently 66) participate in the three components of the assessment cycle: annual viability reports, triennial program assessment, and triennial comprehensive program review, each with follow-up reports. New programs begin with viability reports and then a comprehensive program review, which compares the originally anticipated program growth with actual growth and allows assessment data to be gathered from the program's first graduates. An active assessment cycle for any program over a seven-year period would look similar to the following chart:

| Report | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 | Year 6 | Year 7 |
|---|---|---|---|---|---|---|---|
| Viability Report | X | X | X | X | X | X | X |
| Program Assessment | | | X | | | X | |
| Program Assessment Follow-Up | | | | X | | | X |
| Comprehensive Program Review | | X | | | X | | |
| Comprehensive Program Review Follow-Up | | | X | | | X | |

It is important to note that by using a three-year assessment cycle, SPC seeks to exceed external accreditation and state requirements, which call for a five-year cycle.
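The chart follows a simple pattern: viability reports recur every year, each triennial report recurs every three years, and each follow-up falls in the year after the report it follows. The Python sketch below only reproduces that pattern. It is not an SPC system, and the starting years (year 2 for the comprehensive program review, year 3 for program assessment) are assumptions read off the chart.

```python
# Sketch only: rebuilds the seven-year assessment chart from its recurring pattern.
# Assumption: start years are read off the chart, not taken from an SPC system.

# First program year in which each triennial report is due (per the chart).
TRIENNIAL_START = {
    "Comprehensive Program Review": 2,
    "Program Assessment": 3,
}


def schedule(years: int = 7, cycle_length: int = 3) -> dict[str, list[int]]:
    """Return the program years in which each report type is due."""
    plan: dict[str, list[int]] = {"Viability Report": list(range(1, years + 1))}
    for report, start in TRIENNIAL_START.items():
        due = list(range(start, years + 1, cycle_length))
        plan[report] = due
        # Each follow-up report is filed the year after the report it follows.
        plan[f"{report} Follow-Up"] = [y + 1 for y in due if y + 1 <= years]
    return plan


if __name__ == "__main__":
    for report, due_years in schedule().items():
        print(f"{report:<40} {due_years}")
```

Calling `schedule(cycle_length=5)` shows how much less often the triennial reports and their follow-ups would recur within the same seven years under a five-year cycle.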

Use of Results to Drive Institutional and Program Improvement

Institutional Improvement

SPC uses the analysis of results from its three-year assessment cycle to drive both institutional and programmatic improvement. This clearly defined assessment process ensures a realistic consideration of the outcomes the institution intends to achieve, an explicit evaluation of the evidence showing to what extent it is achieving them, and a clear view of how the data drive the improvement plans. Although an effective oversight group structure has been in place since 2005 to address and review the assessment process, the College continually looks for ways to improve. The Strategic Issues Committee Structure was established in 2012 to increase the integration of faculty within the institutional accreditation and assessment processes. The new committee structure makes the Strategic Issues Council the College-wide communication point for all student learning and accountability initiatives, including determining appropriate measures, reviewing the resulting data, and evaluating impact. Three oversight committees (Academic Affairs, Student Support, and Systems Support) begin the process and are charged with the following key assessment tasks:

1. Evaluate whether the institution successfully achieved its desired outcomes from the previous institutional effectiveness and planning cycle,

2. Identify key areas requiring improvement based on the assessment analysis, and

3. Develop strategies and recommendations to formulate quality improvement initiatives for the next institutional effectiveness and planning cycle.

The Academic Affairs Committee (formerly the Educational Oversight Committee) is charged with reviewing the annual, aggregated overview of items from all of the Academic Program Assessment Reports (APARs). The APARs were intentionally designed to align action plans not only with program learning outcomes but also with specific categories of improvement, to make clear how each plan is intended to affect student success. This alignment is visible in the reference numbers or identifiers attached to the plans in the examples provided.

The options to choose from include:

An example of this analysis, taken from the Outcome Assessment Review Report, is shown below. The report begins with an update on the action items from the prior year.

Assessment Action Items Analysis Results

Four areas were identified (or re-identified) as a result of the analysis. These four areas are:

1. Identify models for capturing and disseminating best practices associated with "real world" experiences (practical applications) [From 2010-11: #1] [Coverage Analysis: A]
2. Continue to improve the general education assessment [New Objective: Related to 2010-11: #2] [Coverage Analysis: D]
3. Explore ways to continue to promote, assess, and improve critical thinking and student engagement [Revised from 2010-11: #3] [Coverage Analysis: D]
4. Review College curriculum for possible improvements [New Objective: Related to 2010-11: #4] [Coverage Analysis: B]

In addition, four areas were identified through discussions with the members of the Academic Affairs Committee. These included:

1. Revise the current College Academic Standing Policy to better align with the Financial Aid SAP policy and implement the Student Life Plan as a retention plan overlay for FTIC students [New Objective] [Coverage Analysis: A/C]
2. Develop a certification program for adjunct instructors [New Objective] [Coverage Analysis: C]
3. Evaluate student experiences in the different modalities [New Objective] [Coverage Analysis: C]
4. Improve the Model for Learning Resources [New Objective] [Coverage Analysis: C]

After the Academic Affairs Committee reviews the analysis, individual objectives and action steps that will lead to improvement are developed for each identified area. These recommendations are then presented to the College-wide Strategic Issues Council (formerly the President’s Cabinet). The following is an example of an identified objective and its action steps from one of the areas outlined above:

In this way, the assessment process focuses not only on individual program improvement but also on College-wide improvement. All full reports are publicly available on the Oversight Groups website, under the Educational Oversight Group header.

Programmatic Improvement

Each of the three components of the assessment cycle (annual viability reports, triennial program assessment, and triennial comprehensive program review), along with its associated follow-up reports, includes specific action plans for programmatic improvement based on the results being analyzed. All reports can be found on the public Educational Outcomes website.

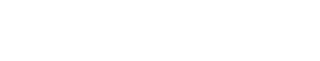

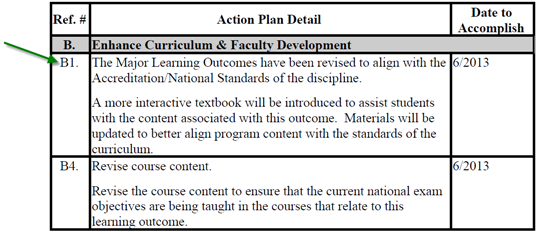

As noted above, the Academic Program Assessment Report aligns its program assessment directly to the Program Learning Outcome (PLO), labeled a Major Learning Outcome (MLO) in the report, that is being assessed and analyzed. The example below is from the AS Early Childhood 2010-11 Follow Up:

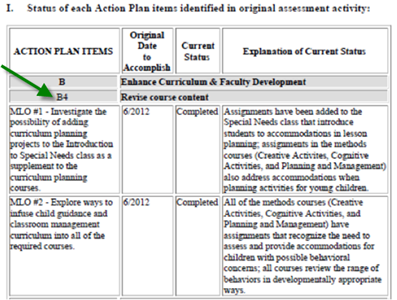

Annual Program Viability Reports align their action plans to the measure being analyzed. The example below is from the BS Nursing 2011-12 Action Plan:

Comprehensive Academic Program Reviews align their action plans directly to the measure being analyzed. The example below is from the AS Dental Hygiene 2010-11 Action Plan:

All three reporting structures include the creation of action plans, as well as follow-up reports one year later on the achievement and impact of action items.

Assessment of Student Learning Process

Identification of Program Learning Outcomes

Created with input from faculty, advisory board members, and program administrators in conjunction with the Curriculum Services Department, SPC Program Learning Outcomes (PLOs) clearly articulate the knowledge, skills, and abilities of program graduates. Associate in Arts degrees focus on students continuing to four-year degree programs, whereas Associate in Science and Baccalaureate programs target students seeking employable skills and transfer to graduate programs. Academic outcomes at SPC fall into two categories: general education outcomes and program-specific major learning outcomes. The outcomes are available to students and stakeholders in a variety of ways, including marketing materials, program websites, and program philosophy statements (p. 8). For programs that are externally accredited by discipline-specific professional and/or state agencies, programmatic student learning outcomes are closely aligned with those established by the accrediting bodies and, in some instances, are stated verbatim.

Program-Specific Major Learning Outcomes

Example:

Bachelor of Applied Science in Health Information Management

Graduates will:

1. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Health Data Structure, Content, and Standards.
2. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Healthcare Information Requirements and Standards.
3. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Clinical Classification Systems.
4. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Reimbursement Methodologies.
5. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Health Statistics and Research.
6. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Quality Management and Performance Improvement.
7. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Healthcare Delivery Systems.
8. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Healthcare Privacy, Confidentiality, Legal, and Ethical Issues.
9. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Information and Communication Technologies.
10. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Data, Information, and File Structures.
11. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Data Storage and Retrieval.
12. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Data Security.
13. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Human Resources.
14. Develop knowledge and skills at the recall, application, and analysis levels in the content area of Financial and Resource Management.

General Education Outcomes

The assessment of the general education competencies is designed as a collegial process wherein faculty and program administrators review the general education curriculum, validating the outputs and proficiencies based on the College’s General Education Goals. Attention is focused on producing outcomes and assessment measures that provide evidence that the courses and curriculum meet institutional goals for student learning, as depicted in the Performance Improvement Cycle, used for all program assessment. This process is coordinated and facilitated through the Academic Effectiveness and Assessment (AEA) department.

In 2009, the general education outcomes were revised from an original set of eleven to five broader general education outcomes (shown below) that closely align with the State of Florida’s recommended student general education outcomes (2008-09 Ed Oversight Follow-up Meeting presentation). The new outcomes were established by the Educational Oversight Group (recently renamed the Academic Affairs Committee), which was composed of general education deans, provosts, senior administrators, student affairs staff, and faculty representing various disciplines (Educational Oversight meeting minutes). The outcomes were then recommended to the President’s Cabinet for review (5.11.09 Minutes) and provided to the Board of Trustees for final approval (July 2009 BOT memo).

SPC General Education Outcomes

1. Critical Thinking: Analyze, synthesize, reflect upon, and apply information to solve problems, and make decisions logically, ethically, and creatively
2. Communication: Listen, speak, read, and write effectively
3. Scientific and Quantitative Reasoning: Understand and apply mathematical and scientific principles and methods
4. Information and Technology Fluency: Find, evaluate, organize, and use information using a variety of current technologies and other resources
5. Global Socio-Cultural Responsibility: Participate actively as informed and ethically responsible citizens in social, cultural, global, and environmental matters

For the past two summers, the Curriculum Services Department has designed a Summer Institute for all academic programs focused on curriculum review, alignment, and revision. The first summer focused on reviewing program learning outcomes to determine their relevancy and measurability, and on creating course sequence curriculum maps that aligned all courses within a degree program to the Program Learning Outcomes (PLOs) at one of three levels: introductory, reinforcement, or enhancement. These maps help ensure that students are exposed to an adequate amount of curriculum to achieve the PLOs. The second Summer Institute examined the connections between chosen programmatic assessments and student learning progression toward PLO attainment.
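One way to picture a course sequence curriculum map is as a table from each course to the PLOs it addresses and the level (introductory, reinforcement, or enhancement) at which it addresses them. The Python sketch below is hypothetical: the course codes and PLO numbers are invented, and it assumes that "adequate" coverage means every PLO is addressed at all three levels somewhere in the sequence, which the narrative does not state.

```python
# Hypothetical curriculum map: course -> {PLO number: level}.
# Levels: I = introductory, R = reinforcement, E = enhancement.
# Course codes and PLO numbers are invented for illustration.
LEVELS = ("I", "R", "E")

curriculum_map: dict[str, dict[int, str]] = {
    "COURSE-101": {1: "I", 2: "I"},
    "COURSE-201": {1: "R", 2: "R", 3: "I"},
    "COURSE-301": {1: "E", 3: "R"},
    "COURSE-401": {2: "E"},
}


def coverage_gaps(cmap: dict[str, dict[int, str]], plos: list[int]) -> dict[int, list[str]]:
    """Return each PLO that is missing one or more levels, with the missing levels.

    Assumption: a PLO is adequately covered only if some course addresses it
    at every level in LEVELS.
    """
    seen: dict[int, set[str]] = {plo: set() for plo in plos}
    for levels_by_plo in cmap.values():
        for plo, level in levels_by_plo.items():
            if plo in seen:
                seen[plo].add(level)
    gaps: dict[int, list[str]] = {}
    for plo, found in seen.items():
        missing = [lv for lv in LEVELS if lv not in found]
        if missing:
            gaps[plo] = missing
    return gaps


print(coverage_gaps(curriculum_map, [1, 2, 3]))  # {3: ['E']}
```

In this invented map, PLO 3 is introduced and reinforced but never enhanced, which is the kind of gap a mapping review would bring to the program's attention.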

These institutes support the development and use of CurricUNet, a new curriculum development tool adopted by SPC during the 2012-13 academic year. All new programs and courses, as well as revisions to existing programs and courses, are handled within this online system, and one of the key steps in the process is to develop or review program learning outcomes and their associations with student learning progression through the program. Thus, the review of PLOs has become an ongoing, embedded, continuous process at SPC.

Outcomes Assessment

The assessment process is managed by Academic Effectiveness and Assessment (AEA), and the reports are housed on the Educational Outcomes Assessment website. The site was developed to provide a medium for completing the educational assessment reports, aggregating student learning outcomes across educational programs, and establishing a repository for additional program-specific information, such as employer surveys, recent alumni surveys, program sequencing maps, and program advisory committee documents. The site not only provides program administrators with an online system in which to enter the various elements of the academic program assessment process, but also allows public access (shown below) to all “completed” assessment reports for active programs dating back to 1999. The public nature of the site further encourages the use of assessment data and highlights “best practices” across the College. The following program assessment reports are captured by this system: Academic Program Viability Reports (APVR), Academic Program Assessment Reports (APAR), Follow-up to APAR Reports, Comprehensive Academic Program Review (CAPR), and Follow-up to CAPR Reports.

SPC was given authority by the state of Florida and SACS-COC to begin offering baccalaureate-level programs in 2001, the first Florida College System institution to be given this opportunity. During the formative years of this transition, all baccalaureate programs were overseen by the Vice President of Baccalaureate Programs and University Partnerships to provide direct guidance and support. Program improvement focused on the development and growth of the programs, attainment of programmatic accreditation, and organization of the program assessment process: alignment of program learning outcomes to courses through sequence mapping and the development of capstone assignments to assess these programmatic outcomes. Student learning outcomes assessment was designed to begin five years after the first semester of enrollment to allow time for students to progress through their program and reach their capstone experience. In 2010, the College reorganized the reporting structure of all programs, transferring program responsibility to the appropriate Academic Dean with oversight by the Senior Vice President of Academic and Student Affairs. Whereas the VP of Baccalaureate Programs previously met with each program to discuss program assessment and improvement, with the discussions captured in annual summaries, the program assessment processes for baccalaureate programs have now been integrated into the Educational Outcomes website under the management of AEA. All previous documentation of program assessment has been uploaded to the website for archival purposes. The attached chart of baccalaureate programs identifies the first term in which students enrolled in each program and the first year each program had graduates, which helps identify the program assessment cycle for each program.

Currently, SPC has 66 active programs and 35 inactive programs stored in the Educational Outcomes Assessment site. The attached spreadsheet, maintained by the AEA department, gives a quick view of the assessment reports available for each active program.

Annual Program Viability Reports

Annual Program Viability Reports are grouped by academic organization (academic org), which includes all academic degrees and certificates within each degree. Each year, a set of measures designed to evaluate a program’s viability, and focused on the College’s mission of student success, is reported. Currently there are seven measures:

1) SSH Enrollment: total number of student semester hours (SSH) within the greater academic org, made up of all associated degrees/certificates

2) Unduplicated Headcount: number of students enrolled in a degree/certificate within an academic org and taking courses associated with that degree/certificate

3) Performance Metric: actual enrollment within an academic org divided by the sum of actual Equated Credit Hours offered within that org per semester

4) Course Success Rate: number of students completing courses within the academic org with a grade of A, B, or C, divided by the total number of students taking courses within the academic org, expressed as a percentage

5) Program Graduates: number of graduates, separated by degree/certificate

6) Total Placement: percentage of graduates enlisted in the military, employed, and/or continuing their education within the first year after graduation

7) National, State and County Employment Trends: average annual job openings
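The ratio-based measures (Performance Metric, Course Success Rate, and Total Placement) can be sketched in Python as below. This is not Institutional Research's code: the record layouts and field names are hypothetical, and the sketch assumes the course success rate counts each student-course grade record once (so a withdrawal counts as an unsuccessful attempt), a detail the definition leaves open.

```python
# Hypothetical sketch of three ratio-based viability measures for one academic org.
# Record layouts and field names are invented; only the ratios follow the
# measure definitions in the narrative.
from dataclasses import dataclass

PASSING_GRADES = {"A", "B", "C"}


@dataclass
class CourseGrade:
    student_id: str
    course: str
    grade: str  # final letter grade, e.g. "A", "C", "D", "F", "W"


@dataclass
class Graduate:
    student_id: str
    military: bool = False
    employed: bool = False
    continuing_education: bool = False  # all within the first year after graduation


def course_success_rate(grades: list[CourseGrade]) -> float:
    """Percent of grade records in the org with a final grade of A, B, or C."""
    if not grades:
        return 0.0
    successes = sum(1 for g in grades if g.grade in PASSING_GRADES)
    return 100.0 * successes / len(grades)


def performance_metric(enrollment: int, equated_credit_hours: float) -> float:
    """Actual enrollment divided by the Equated Credit Hours offered in a semester."""
    if equated_credit_hours <= 0:
        raise ValueError("equated_credit_hours must be positive")
    return enrollment / equated_credit_hours


def total_placement(graduates: list[Graduate]) -> float:
    """Percent of graduates in the military, employed, and/or continuing their education."""
    if not graduates:
        return 0.0
    placed = sum(1 for g in graduates if g.military or g.employed or g.continuing_education)
    return 100.0 * placed / len(graduates)


grades = [
    CourseGrade("s1", "COURSE-101", "A"),
    CourseGrade("s2", "COURSE-101", "C"),
    CourseGrade("s3", "COURSE-101", "D"),
    CourseGrade("s4", "COURSE-101", "W"),
]
graduates = [
    Graduate("g1", employed=True),
    Graduate("g2", employed=True, continuing_education=True),
    Graduate("g3"),
]
print(course_success_rate(grades))           # 50.0
print(performance_metric(450, 500))          # 0.9
print(round(total_placement(graduates), 1))  # 66.7
```

Note that graduate g2 is counted once even though two placement categories apply, which matches the "and/or" wording of the Total Placement definition.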

After reviewing the statistical trends with program faculty and program coordinators, academic Deans follow up on the past year’s action plans, evaluate the impact of those plans on program quality, create the next year’s action plan, identify special resources needed, and note area(s) of concern or improvement. Upon completion, all Viability Reports are printed, bound, and shared with the President for an overall College academic program review.

Business Intelligence System

The Annual Program Viability Reports have traditionally been static annual reports with charts provided by Institutional Research. However, SPC recently implemented a business intelligence software solution, “SPC PULSE BI,” to provide end users and key stakeholders with standardized information that can be examined through multiple views. The system can quickly filter “real-time” management data to address questions and identify potential areas for improvement. Measures such as student course-level success rates can be rolled up and viewed at the aggregate institutional level, or an end user can “drill down” and view the same measures at the campus or program level. This dynamic system gives College employees quick access to the information required to make decisions that improve student success. A screenshot of the user-friendly interface is shown below.
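The difference between rolling a measure up and drilling down can be sketched with course success counts. The Python below is hypothetical: campus and program names and counts are invented, it does not reflect how SPC PULSE BI is implemented, and it assumes success rates are recomputed from pooled counts at each level rather than averaged from lower-level percentages.

```python
# Hypothetical roll-up / drill-down of a course success measure.
# Names and counts are invented; this does not reflect SPC PULSE BI internals.
from collections import defaultdict
from typing import Callable, Hashable, Optional

# (campus, program, successful completions, total course enrollments)
Row = tuple[str, str, int, int]

records: list[Row] = [
    ("Campus A", "Program X", 80, 100),
    ("Campus A", "Program Y", 45, 60),
    ("Campus B", "Program X", 30, 50),
]


def success_rate(rows: list[Row], key: Optional[Callable[[Row], Hashable]] = None) -> dict:
    """Pool counts per group (whole institution if key is None) and return % success."""
    totals: dict = defaultdict(lambda: [0, 0])
    for row in rows:
        group = key(row) if key else "Institution"
        totals[group][0] += row[2]  # successful completions
        totals[group][1] += row[3]  # total enrollments
    return {group: round(100 * s / n, 1) for group, (s, n) in totals.items()}


print(success_rate(records))                              # roll-up: institution
print(success_rate(records, key=lambda r: r[0]))          # drill-down: campus
print(success_rate(records, key=lambda r: (r[0], r[1])))  # drill-down: program
```

Pooling matters: averaging the two campus percentages (78.125 and 60.0) would give about 69.1, while the pooled institutional rate is 155 / 210, or about 73.8, because Campus A enrolls more students.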

Although “SPC PULSE BI” is already used in many ways throughout the College, a future goal is for it to enable end users to gather the data needed for Academic Program Viability Reports (APVR) themselves, and to monitor changes to these reports throughout the year to gauge the success of action plan implementation. The Institutional Research department has been responsible for training all administrators and faculty on the use and functionality of this tool.

Academic Program Assessment Reports

Program assessment reports are focused upon evaluation of program learning outcomes or general education outcomes. Assessment reports provide comparisons of present and past results, which are used to identify topics where improvement is possible. SPC has used assessment reports as a vital tool in achieving its commitment to continuous improvement. General education and program-specific academic assessments (titled Academic Program Assessment Reports or APARs) are conducted according to a three-year cycle shown in the diagram below.

Specifically, the Academic Program Assessment Reports consist of the following seven sections:

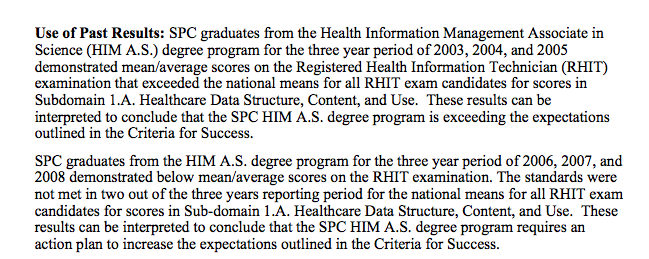

1) Use of Past Results - captures the effects of previous action plans

Sample Language:

2) Major Learning Outcomes - lists the program PLOs

3) Assessment Methodology - defined per PLO

4) Criteria for Success - defined per PLO

5) Summary of Assessment Findings - defined per PLO

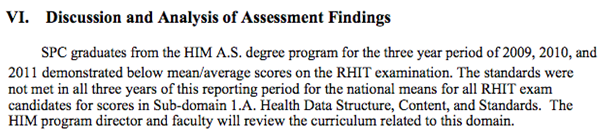

6) Discussion and Analysis - defined per PLO. The discussion and analysis section details the comparison between the current year's assessment results and the thresholds established in the Criteria for Success section of the document. This information drives change and improvement in the program by highlighting the learning outcome areas that do not meet established thresholds and establishing appropriate action plans.

Sample Language:

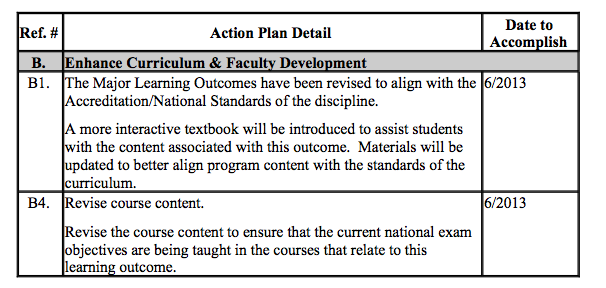

7) Action Plan and Timeline - defined per PLO and includes budgetary and planning implications. The action plan and timeline section is created by the program administrator to address issues identified in the assessment findings and drive program improvement.

Sample Language:

In the year following an APAR, program administrators submit the follow-up report to ensure that identified changes from the action plans are implemented and will be measured in the subsequent reports.
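The comparison at the heart of the Discussion and Analysis section, current results against the Criteria for Success thresholds, can be sketched as follows. The PLO labels, thresholds, and results are invented, and the sketch assumes a result equal to its threshold counts as meeting it. In practice, each flagged PLO would be addressed in the Action Plan and Timeline section.

```python
# Hypothetical sketch of the Discussion and Analysis comparison: each PLO's
# current result is checked against its Criteria for Success threshold.
# PLO labels, thresholds, and results are invented for illustration.
from dataclasses import dataclass


@dataclass
class PLOResult:
    plo: str
    threshold: float  # Criteria for Success, e.g. % of students scoring proficient
    result: float     # Summary of Assessment Findings for the current year


def needs_action_plan(results: list[PLOResult]) -> list[str]:
    """Return the PLOs whose current result falls below the established threshold.

    Assumption: a result equal to the threshold counts as meeting it.
    """
    return [r.plo for r in results if r.result < r.threshold]


findings = [
    PLOResult("PLO 1", threshold=75.0, result=82.0),
    PLOResult("PLO 2", threshold=75.0, result=68.5),
    PLOResult("PLO 3", threshold=80.0, result=80.0),
]
print(needs_action_plan(findings))  # ['PLO 2']
```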

Sample action plan items:

General Education Assessment

In addition to the more traditional assessment process described above, the APAR captures the general education outcomes assessment process. This process includes an institutionally based online general education assessment, implemented in Spring 2010, that evaluates the quality of the general education curriculum and identifies areas for performance improvement by assessing students near program completion. Students who complete 45 credit hours are invited to participate in an online 50-item assessment. The intent of this enhanced assessment model is to evaluate the College’s General Education program and curriculum as a whole, rather than only the competencies a student would acquire within a single course.

The three objectives in creating this model were to (1) establish a psychometrically sound general education assessment model to assess students nearing the completion of their degree, (2) use available technology to encourage student participation through a cost-effective online administration model that allows data to move seamlessly among systems, and (3) use the results produced by this new assessment model to identify specific areas of SPC’s general education curriculum for continuous performance improvement.

As part of the test development process, the five established general education outcomes were operationalized in test specifications. Through the test specification process, each item was assigned to either a lower or a higher level of cognitive complexity. Examples of lower cognitive complexity items include knowledge-based definitional items. Examples of higher cognitive complexity items include evaluating audio files to assess listening skills and using scenarios to assess critical thinking skills.

ETS® Proficiency Profile

(Formerly known as Measure of Academic Proficiency and Progress (MAPP))

The ETS® Proficiency Profile assesses student learning by measuring critical thinking, reading, writing, and mathematics in a single, convenient test. The ETS Proficiency Profile is designed for colleges and universities to assess their general education outcomes and inform teaching and learning. It has been selected by the Voluntary System of Accountability (VSA) as a gauge of general education outcomes.

SPC first administered the MAPP (now called the Proficiency Profile) during the 2007-08 academic year to students at all program levels (AA, AS, BS).

This instrument is administered periodically and is one of several direct measures aligned to the Critical Thinking Student Learning Outcomes (SLOs), used to measure the institution’s progress toward increasing students’ ability to think critically as part of SPC's Quality Enhancement Plan.

In fall 2011, after reviewing the direct measures aligned to the Critical Thinking SLOs with available data, SPC administered ETS’ Proficiency Profile (formerly the MAPP) in order to collect additional general education data from students close to graduation in the baccalaureate programs, as well as in the AA and AS programs.

Administration of the assessment was conducted completely online and was accessible from any computer with internet access. For lower-division students, a list of eligible students was generated based on the number of credit hours completed (45-55), and students were then randomly selected from that list to participate. Selected students were notified via their personal email addresses and through a “course” in the ANGEL learning management system. Baccalaureate students were identified by enrollment in capstone courses: all BS/BAS students enrolled in their program's capstone course (the final culminating experience in the program) were notified of their eligibility to participate via personal email.
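The lower-division selection step (filter by credit hours completed, then select at random) can be sketched in Python as below. Student IDs, hour counts, and the sample size are hypothetical, and the sketch assumes the 45-55 range is inclusive and that selection is a simple random sample without replacement, which the narrative implies but does not specify.

```python
# Hypothetical sketch of lower-division participant selection.
# Assumptions: the 45-55 credit-hour range is inclusive, and selection is a
# simple random sample without replacement. IDs and counts are invented.
import random
from typing import Optional


def select_participants(
    credit_hours: dict[str, int],
    sample_size: int,
    low: int = 45,
    high: int = 55,
    seed: Optional[int] = None,
) -> list[str]:
    """Return a random sample of student IDs whose completed hours fall in [low, high]."""
    # Sort so that a fixed seed gives a reproducible draw.
    eligible = sorted(sid for sid, hours in credit_hours.items() if low <= hours <= high)
    rng = random.Random(seed)
    return rng.sample(eligible, min(sample_size, len(eligible)))


students = {"s1": 44, "s2": 45, "s3": 50, "s4": 55, "s5": 56, "s6": 60}
print(select_participants(students, sample_size=2, seed=2013))
```

Students s1, s5, and s6 fall outside the range and can never be drawn; fixing the seed simply makes a given draw reproducible for documentation.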

Comprehensive Academic Program Review

The Comprehensive Academic Program Review (CAPR) process is specifically designed to be a summative evaluation of the various academic programs at the College. It was developed to meet three objectives within the academic assessment process:

- To provide a comprehensive trend report that summarizes all elements of the program’s viability and productivity from a 360-degree perspective,

- To provide comprehensive and relevant program-specific information to key College stakeholders in order to make critical decisions regarding the continued sustainability of a program, and

- To provide program leadership a vehicle to support and document actionable change for the purposes of performance improvement.

The development of the CAPR was a multi-departmental effort involving numerous academic programs as well as all administrative offices in the area of institutional effectiveness. The CAPR process has intentionally remained dynamic, allowing changes and adjustments to measures, definitions, and types of attachments with each new program review. Proposed revisions to the document balance the measures that best describe and evaluate an individual program against the global impact of the revisions on future program reviews. SPC reduced the recommended program review timeline to three years to coincide with the long-standing three-year academic program assessment cycle, producing a more coherent and integrated review process.

The report includes the following areas: an executive summary of findings, program description, program performance measures, program profitability measures, capital expenditures, academic outcomes, stakeholder perceptions, occupation trends and information, state graduate-outcomes information, and the program director’s description of program issues, trends, and recent successes. To ensure the use of results, the program administrator and dean are required to provide an action plan for improving the performance of the program, and a follow-up report on these results is required the following year. The completed report is also reviewed by the Academic Affairs Committee and the College-wide Strategic Issues Council (formerly the President’s Cabinet) and shared with the individual program’s advisory committee.

Distance Education Assessment of Student Learning Outcomes

According to SPC's definition of distance learning (see FR 4.8.1, FR 4.8.2, FR 4.8.3, or attached), academic programs at SPC fall into one of three categories: face-to-face, student choice, and online. All academic programs, regardless of modality, follow the same assessment process. A list of programs grouped by modality has been attached.

Whereas face-to-face and online programs have courses that are offered only in the designated modality, student choice programs are built on offering courses in multiple modalities to best suit the needs of the College’s adult population. Students do not have to choose a modality and remain in it for the duration of their program; instead, they can consider the course content, course schedule, or a variety of other personal factors and choose the course that meets their current needs, regardless of modality. Student choice programs are viewed as a single, integrated program and assessed as such.

Academic Deans compare individual course modalities, mostly at the end of each semester. The results of all course evaluations, the Student Surveys of Instruction (SSI), are made available to Deans, Administrators, and Faculty each semester. Trend comparison data between academic orgs for the past four semesters is attached. Deans also have access to the business intelligence tool, “SPC PULSE BI,” to gather individual course success rates and enrollment rates on an ongoing basis. Trend data for the Spring 2012-13 academic year per academic org is attached. These comparisons are often used to identify when the number of sections of a course offered in a particular modality should change.

Supporting Documentation

To preserve the integrity of the supporting documentation in case of updates occurring between the submission of this document and the review, the narrative above links to PDF versions, whereas live links are included below.

- SPC Mission

- Active Program and Assessment Chart

- Outcome Assessment Review Report

- Oversight Groups

- Educational Outcomes

- Veterinary Technology Marketing Materials

- Human Services Program

- Nursing BSN Program Philosophy

- Educational Oversight Meeting Presentation

- Educational Oversight Meeting Minutes

- Cabinet Meeting Minutes

- BOT Memo

- Health Information Management Course Sequence Curriculum Map

- Baccalaureate Programs Start and First Graduation Chart

- General Education Test Specifications

- Quality Enhancement Plan Impact Report

- Comprehensive Academic Program Review (CAPR) Process

- Federal Requirement 4.8.1 Distance and Correspondence Education - Student Authentication

- Federal Requirement 4.8.2 Distance and Correspondence Education - Privacy

- Federal Requirement 4.8.3 Distance and Correspondence Education - Charges

- Distance Learning Definitions

- Program Modality List

- Trend Comparison Data

- Trend Data for Spring 2012-13 Academic Year per Academic Org